In this guide, I show you what content to include in the knowledge base (KB) versus the global and state-specific prompts when building multi-prompt voice AI agents.

The core objective is to make the voice agent cost-efficient (by enforcing token and latency budgets) without sacrificing its performance.

# Treat the global prompt as the “company playbook,” state prompts as “local playbooks,” and the knowledge base (KB) as the “filing cabinet.”

The global playbook holds the rules and behaviours that apply to the agent in every state; state playbooks hold jurisdiction-specific rules and language; and the filing cabinet stores long-form reference documents and details that the agent looks up only when needed.
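To make the three layers concrete, here is a minimal Python sketch of how a system prompt might be assembled for each call. The names `GLOBAL_PROMPT`, `STATE_PROMPTS`, and `build_system_prompt` are hypothetical, the prompt text is placeholder, and the sketch assumes the caller's state is already known (for example, from call routing). Your voice platform may assemble prompts differently.

```python
from __future__ import annotations

# Hypothetical prompt layers; all text below is placeholder.
GLOBAL_PROMPT = (
    "You are a phone assistant. Keep answers short, confirm the caller's "
    "identity before discussing account details, and offer a human handoff on request."
)

STATE_PROMPTS = {
    "CA": "California-specific rules and required disclosures go here.",
    "TX": "Texas-specific rules and required disclosures go here.",
}


def build_system_prompt(state: str, kb_snippets: list[str] | None = None) -> str:
    """Compose the global rules, then the caller's state rules, then any retrieved KB text."""
    if state not in STATE_PROMPTS:
        raise ValueError(f"No state prompt configured for {state!r}")
    parts = [GLOBAL_PROMPT, STATE_PROMPTS[state]]
    if kb_snippets:
        # Only pay the token cost of reference material when something was retrieved.
        parts.append("Reference material:\n" + "\n".join(kb_snippets))
    return "\n\n".join(parts)


print(build_system_prompt("TX"))
```

The ordering mirrors the playbook analogy: the company playbook always comes first, the local playbook narrows it, and the filing cabinet is appended only when a lookup actually happened.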

# Avoid using the knowledge base for voice AI agents, as it can considerably increase latency.

For real-time voice agents, retrieving from a knowledge base adds an extra step (query + embedding lookup + re-ranking) before the model can respond, which measurably increases latency, often enough for callers to notice.
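The sketch below shows why the retrieval step costs time: it runs in series before the model call, so its duration adds directly to the turn. The stage functions are hypothetical stubs that sleep for placeholder durations; they are not measurements of any real KB or model.

```python
from __future__ import annotations

import time
from typing import Callable


def timed_turn(stages: list[tuple[str, Callable[[], None]]]) -> dict[str, float]:
    """Run each stage of one turn in order and record its duration in milliseconds."""
    timings: dict[str, float] = {}
    for name, stage in stages:
        start = time.perf_counter()
        stage()
        timings[name] = (time.perf_counter() - start) * 1000
    # Stages run sequentially, so the turn's latency is the sum of its stages.
    timings["total"] = sum(timings.values())
    return timings


def fake_retrieval() -> None:
    # Hypothetical stand-in for query + embedding lookup + re-ranking.
    time.sleep(0.05)


def fake_llm() -> None:
    # Hypothetical stand-in for the model producing its first token.
    time.sleep(0.10)


without_kb = timed_turn([("llm", fake_llm)])
with_kb = timed_turn([("retrieval", fake_retrieval), ("llm", fake_llm)])
print(f"without KB: {without_kb['total']:.0f} ms, with KB: {with_kb['total']:.0f} ms")
```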

One of the best practices for designing voice agents is to avoid using a knowledge base unless you truly need it.

Here is what I mean.

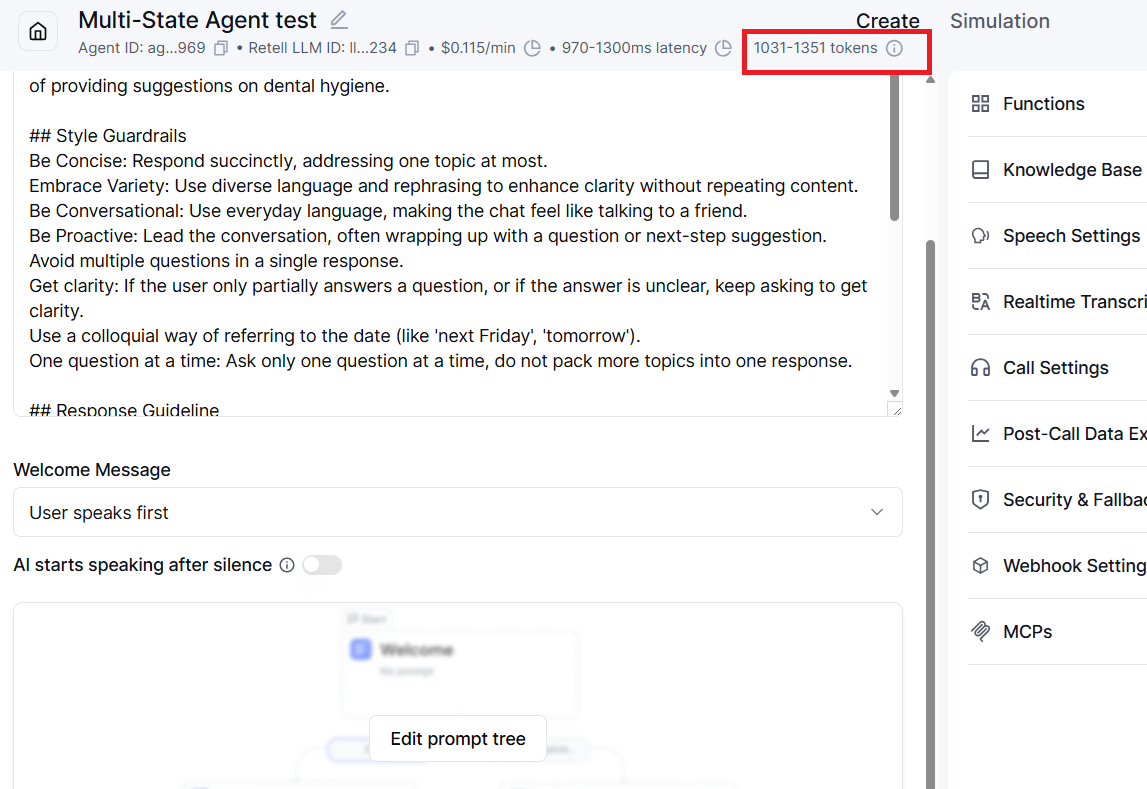

Before adding the KB to the voice agent: 1,031–1,351 tokens and ~1,300 ms latency.

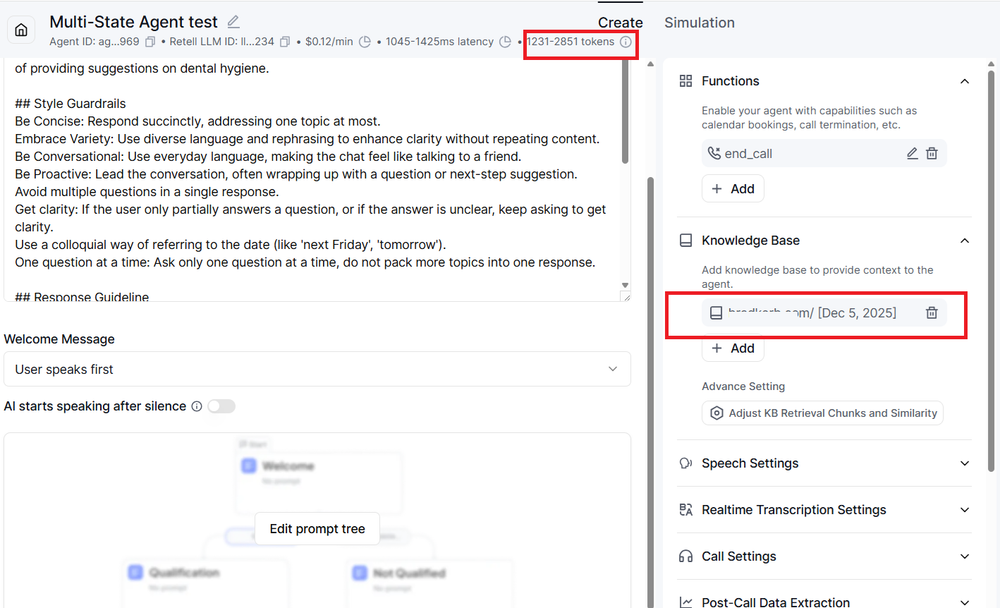

After adding the KB to the voice agent: 1,231–2,851 tokens and ~1,425 ms latency.

In this example, adding the knowledge base widened the token range from 1,031–1,351 to 1,231–2,851 (the upper bound more than doubled) and added roughly 125 ms of latency, an increase of about 10%.

Another problem is retrieval quality. If the retrieval-augmented generation (RAG) step pulls the wrong chunks, your voice agent will answer incorrectly.
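One common guard against inaccurate retrieval is a similarity threshold: if no chunk scores high enough, pass nothing to the model and let the agent say it does not know or hand off. This is a minimal sketch under stated assumptions: the 2-dimensional vectors stand in for real embeddings, and the `min_score=0.75` default is a hypothetical value you would tune on your own data.

```python
from __future__ import annotations

import math


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity between two vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def best_chunk_or_none(
    query_vec: list[float],
    chunks: list[tuple[str, list[float]]],
    min_score: float = 0.75,  # hypothetical threshold; tune on real queries
) -> str | None:
    """Return the best-matching chunk text, or None if nothing clears the threshold."""
    best_text, best_score = None, -1.0
    for text, vec in chunks:
        score = cosine(query_vec, vec)
        if score > best_score:
            best_text, best_score = text, score
    return best_text if best_score >= min_score else None


# Toy index with placeholder text and 2-d "embeddings".
chunks = [
    ("Refund policy (placeholder text)", [1.0, 0.0]),
    ("Office hours (placeholder text)", [0.0, 1.0]),
]
print(best_chunk_or_none([0.9, 0.1], chunks))  # close to the refund chunk
print(best_chunk_or_none([0.7, 0.7], chunks))  # ambiguous, so nothing is returned
```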

Here are the main reasons to be very cautious with knowledge bases in voice agents:

#1 Increased latency per turn.

Every KB lookup adds a retrieval step (query + embedding search + re-ranking) and usually increases context size, which slows down model responses.

In real-time voice, even a few hundred milliseconds extra per turn is noticeable and harms the “instant” feel.
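If you do keep a KB, one way to protect per-turn latency is to give retrieval a hard time budget and fall back to the prompts alone when it runs over. This is a minimal `asyncio` sketch, assuming your retrieval call is awaitable; `retrieve_with_budget`, the lookup stubs, and the 150 ms budget are hypothetical.

```python
from __future__ import annotations

import asyncio
from typing import Awaitable, Callable


async def retrieve_with_budget(
    retrieve: Callable[[str], Awaitable[list[str]]],
    query: str,
    budget_ms: float,
) -> list[str]:
    """Run a KB lookup, but return no context if it exceeds the latency budget."""
    try:
        return await asyncio.wait_for(retrieve(query), timeout=budget_ms / 1000)
    except asyncio.TimeoutError:
        # Answer from the prompts alone rather than leave the caller in silence.
        return []


async def slow_kb_lookup(query: str) -> list[str]:
    await asyncio.sleep(0.3)  # hypothetical slow retrieval
    return [f"chunk for {query!r}"]


async def fast_kb_lookup(query: str) -> list[str]:
    await asyncio.sleep(0.01)  # hypothetical fast retrieval
    return [f"chunk for {query!r}"]


print(asyncio.run(retrieve_with_budget(slow_kb_lookup, "refund policy", budget_ms=150)))
print(asyncio.run(retrieve_with_budget(fast_kb_lookup, "refund policy", budget_ms=150)))
```

The design choice here is to prefer a slightly less informed answer over dead air; whether that trade-off is acceptable depends on how critical the KB content is for the question being asked.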

#2 Higher token usage and cost.

Retrieved chunks add tokens to the model input on every turn they’re used.

Large KBs or frequent KB hits increase the number of tokens processed per minute of conversation, adding LLM costs on top of audio costs.
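A back-of-the-envelope estimate shows how retrieved tokens scale cost. This sketch assumes the token figures from the example above are input tokens per turn, and it uses placeholder values for turns per minute and price per million tokens; substitute your own traffic and your provider's pricing.

```python
def llm_cost_per_minute(
    tokens_per_turn: int,
    turns_per_minute: float,
    usd_per_million_tokens: float,
) -> float:
    """Rough LLM input-token cost per minute of conversation.

    Ignores output tokens, caching discounts, and audio (speech) costs.
    """
    return tokens_per_turn * turns_per_minute * usd_per_million_tokens / 1_000_000


# Upper ends of the token ranges above; 6 turns/min and $1.00 per 1M tokens are placeholders.
for label, tokens in [("without KB", 1351), ("with KB", 2851)]:
    print(f"{label}: ${llm_cost_per_minute(tokens, 6, 1.00):.4f}/min")
```

Because cost is linear in tokens per turn, doubling the upper bound of the token range roughly doubles the LLM portion of the bill, whatever the actual price.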

#3 Risk of cross-jurisdiction / wrong-scope answers.

If a single agent sees KB content for multiple states or locations, retrieval can pull the wrong-state chunk (e.g., California policy for a Texas caller) unless scoping and metadata are carefully designed.
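Here is a sketch of metadata scoping, assuming every chunk is tagged with a state code at indexing time and the caller's state is known before retrieval. Many vector stores offer metadata filters for this purpose; here a plain list filter stands in, and `Chunk` and `scoped_candidates` are hypothetical names.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Chunk:
    text: str
    state: Optional[str]  # None means the chunk applies to every state


def scoped_candidates(chunks: list[Chunk], caller_state: str) -> list[Chunk]:
    """Keep only chunks for the caller's state, plus state-agnostic ones.

    Filtering happens before similarity ranking, so a chunk tagged for another
    state can never be returned, however similar its wording is.
    """
    return [c for c in chunks if c.state is None or c.state == caller_state]


kb = [
    Chunk("California cancellation rules (placeholder text)", "CA"),
    Chunk("Texas cancellation rules (placeholder text)", "TX"),
    Chunk("Business hours (placeholder text)", None),
]
print([c.text for c in scoped_candidates(kb, "TX")])
```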

#4 Encourages “dumping ground” behaviour.

Companies often throw entire PDFs, websites, and policy packs into the KB “just in case.”

This:

- Bloats the index.

- Increases retrieval noise.

- Makes it harder to know what the agent is actually relying on.
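One lightweight counter to the dumping-ground problem is a manifest that deduplicates chunks and records where each one came from. This hypothetical sketch catches only exact duplicates after whitespace and case normalization, not paraphrases, and the document names are placeholders.

```python
from __future__ import annotations

import hashlib


def build_manifest(docs: dict[str, list[str]]) -> dict[str, str]:
    """Map a content hash of each unique chunk to the document it came from.

    Exact duplicates (e.g., the same paragraph in two exports) are indexed once,
    and every indexed chunk can be traced back to a source document.
    """
    manifest: dict[str, str] = {}
    for source, chunks in docs.items():
        for chunk in chunks:
            normalized = " ".join(chunk.split()).lower()
            key = hashlib.sha256(normalized.encode("utf-8")).hexdigest()
            manifest.setdefault(key, source)  # first source wins for duplicates
    return manifest


docs = {
    "pricing.pdf": ["Plans start at the Basic tier.", "Contact sales for quotes."],
    "website_dump.html": ["Plans start at the  Basic tier.", "Cookie banner text."],
}
manifest = build_manifest(docs)
print(f"{sum(len(c) for c in docs.values())} chunks in, {len(manifest)} indexed")
```

Reviewing the manifest's values also shows which sources dominate the index, which helps answer what the agent is actually relying on.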

#5 Index changes can affect agent behaviour.

When you add, remove, or restructure KB documents, retrieval behaviour can shift without any change to prompts.

This makes behaviour less transparent and requires more disciplined release and testing processes.
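A simple way to make index changes visible is a retrieval regression check: record the expected top chunk ID for a fixed set of test queries, rerun them after every KB change, and review any that moved. `retrieval_diff`, the chunk IDs, and the `retrieve_top_id` callable are hypothetical; in practice the callable would wrap your real retrieval call.

```python
from __future__ import annotations

from typing import Callable


def retrieval_diff(
    golden: dict[str, str],
    retrieve_top_id: Callable[[str], str],
) -> dict[str, tuple[str, str]]:
    """Return {query: (expected_id, actual_id)} for every query whose top chunk changed."""
    changed: dict[str, tuple[str, str]] = {}
    for query, expected in golden.items():
        actual = retrieve_top_id(query)
        if actual != expected:
            changed[query] = (expected, actual)
    return changed


# Expected top chunks recorded before the KB change (placeholder IDs).
golden = {"How do I cancel?": "cancel-policy-v1", "What are your hours?": "hours"}
# Simulated results after re-indexing: a new draft FAQ now wins the first query.
new_index = {"How do I cancel?": "cancel-faq-draft", "What are your hours?": "hours"}
print(retrieval_diff(golden, lambda q: new_index[q]))
```

Treating a non-empty diff as a release blocker (or at least a required review) gives KB changes the same discipline as prompt changes.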

As a result, many production voice systems treat the KB as an optional, last-resort layer rather than a default mechanism.
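The “last-resort” pattern can be sketched as a lookup order: answer from content already in the prompt first and call the KB only on a miss. The exact-match FAQ lookup here is a deliberately crude, hypothetical stand-in for whatever intent matching your agent uses, and `answer_context` and the FAQ text are placeholders.

```python
from __future__ import annotations

from typing import Callable, Optional


def answer_context(
    question: str,
    prompt_faq: dict[str, str],
    kb_lookup: Callable[[str], Optional[str]],
) -> tuple[str, str]:
    """Resolve context from the prompt-embedded FAQ first; hit the KB only on a miss.

    Returns (source, text), where source is "prompt", "kb", or "none".
    """
    key = question.strip().lower()
    if key in prompt_faq:
        return "prompt", prompt_faq[key]  # no retrieval latency or extra tokens
    kb_text = kb_lookup(question)
    if kb_text is not None:
        return "kb", kb_text
    return "none", ""


faq = {"what are your hours?": "Placeholder hours answer."}


def kb_lookup(question: str) -> Optional[str]:
    # Hypothetical KB that only knows about warranties.
    return "Placeholder warranty text." if "warranty" in question.lower() else None


print(answer_context("What are your hours?", faq, kb_lookup))
print(answer_context("Warranty terms for my device?", faq, kb_lookup))
```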