If your prompts don’t work, it’s usually because they are operationally vague.

They describe what you want conceptually, but not what the AI should do in concrete, operational terms.

To create an operationally sound prompt, you need to specify the parameters that define how something should look, behave, or be structured.

These are what I call domain-specific parameters.

Introduction to Domain-specific parameters.

Domain-specific parameters are adjustable settings that influence how an AI model interprets, structures, and executes a task within a particular field or context.

They serve as the operational variables that translate conceptual intent into precise, actionable instructions.

For example:

- In VFX or video generation, domain parameters include camera angles, lens types, lighting conditions, and motion paths.

- In analytics (GA4), they include metrics, dimensions, filters, and scopes that define how data is aggregated and analysed.

- In software development, parameters might involve function inputs, API endpoints, or configuration options that determine how code behaves.

Each domain has its own unique set of parameters, and understanding them is what allows you to guide AI systems effectively.

Without this domain expertise, prompts remain vague, and the outputs are underwhelming, inconsistent or generic.

Example of an operationally vague video prompt.

Following is an example of an operationally vague prompt in the context of AI video generation:

A spaceship flying through a nebula, epic sci-fi scene.

This prompt describes a concept, not an operation. The AI understands the general idea but lacks the specific parameters needed to execute it effectively.

Example of an operationally sound video prompt.

Following is an example of an operationally sound prompt in the context of AI video generation:

Establishing wide shot, 14mm lens, slow orbital dolly around a 2km-long asymmetrical dreadnought with exposed greebled engine arrays glowing cyan-white from ion thrusters. Ship passes through a turbulent magenta-blue nebula with particulate density 0.3, causing micro-lensing distortion on hull reflections. Practical hard rim light from off-screen binary star (5600K)…

This version gives the AI clear operational instructions: camera setup, lighting, colour temperature, motion, and environmental physics.
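One way to see the difference is to treat each domain parameter as an explicit field instead of leaving it implied. The sketch below uses a hypothetical Python `ShotSpec` class (the class, its field names, and the 1000 to 20000K sanity range are assumptions for illustration, not any video tool's schema) that renders parameters into a prompt string, so a missing lens or colour temperature becomes obvious before anything reaches the model.

```python
from dataclasses import dataclass


@dataclass
class ShotSpec:
    """Hypothetical container for video-generation parameters (not any tool's real schema)."""
    shot_type: str      # e.g. "Establishing wide shot"
    lens_mm: int        # focal length in millimetres
    camera_move: str    # e.g. "slow orbital dolly"
    subject: str        # what the camera moves around
    environment: str    # setting and environmental physics
    key_light: str      # quality and direction of the main light
    color_temp_k: int   # colour temperature of that light, in kelvin

    def __post_init__(self) -> None:
        # Arbitrary sanity bounds (assumed); adjust to your own pipeline.
        if self.lens_mm <= 0:
            raise ValueError("lens_mm must be positive")
        if not 1000 <= self.color_temp_k <= 20000:
            raise ValueError("color_temp_k outside the 1000-20000K sanity range")

    def to_prompt(self) -> str:
        # Camera first, then subject and environment, then lighting.
        return (
            f"{self.shot_type}, {self.lens_mm}mm lens, {self.camera_move} around "
            f"{self.subject}. {self.environment}. "
            f"{self.key_light} ({self.color_temp_k}K)."
        )


shot = ShotSpec(
    shot_type="Establishing wide shot",
    lens_mm=14,
    camera_move="slow orbital dolly",
    subject="a 2km-long asymmetrical dreadnought with cyan-white ion engine arrays",
    environment="The ship passes through a turbulent magenta-blue nebula",
    key_light="Hard rim light from an off-screen binary star",
    color_temp_k=5600,
)
print(shot.to_prompt())
```

The point of the structure is not the string formatting; it is that every slot has to be filled deliberately, which is exactly where VFX knowledge comes in.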

Domain Parameters for AI Video Generation.

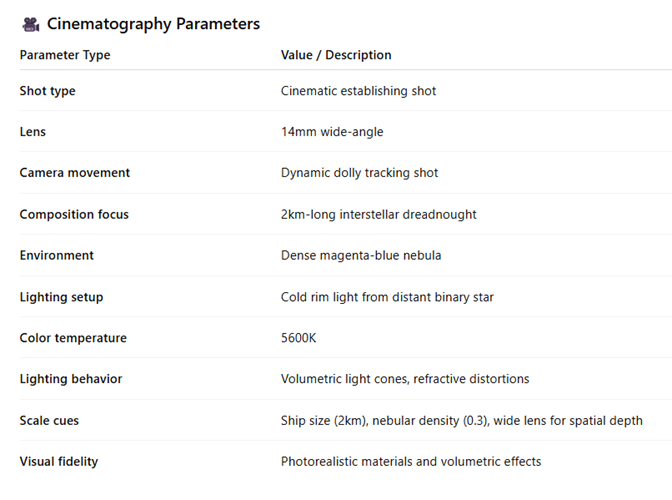

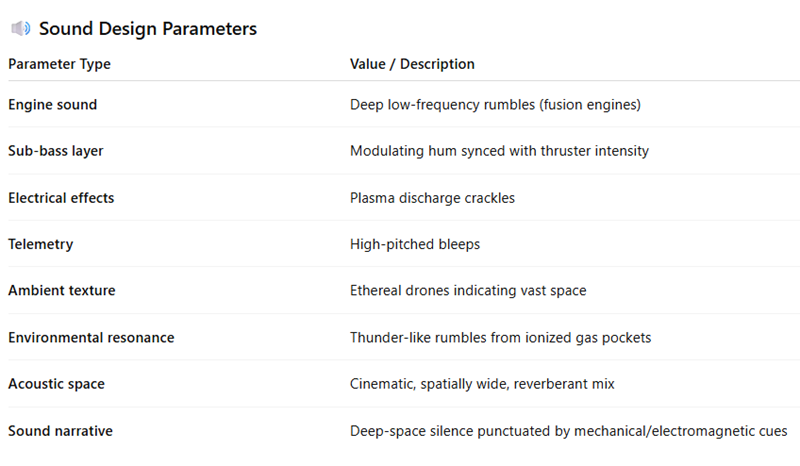

The following are examples of domain parameters used in the operationally sound prompt for AI video generation.

Understanding Video Parameters is key.

You need a good understanding of all of these parameters (and more) to create a compelling sci-fi video.

Without a background in VFX, you won’t know what these parameters mean, how to manipulate them, or which ones communicate your vision effectively to video diffusion models.

Without domain-specific parameters, video generation tools (like Runway or Pika) revert to generic interpretations, often producing underwhelming or incoherent results.

If you want full, precise control over video production, you need a strong understanding of all these video parameters.

The same logic applies to every modality.

Whether you’re generating images, text, or code, the principle is identical:

Without domain knowledge, your prompts lack the operational grounding needed to effectively guide the model.

Example of an operationally vague GA4 prompt.

Following is an example of an operationally vague prompt in the context of GA4:

Show me sales trends.

Why it fails:

>> Doesn’t specify the GA4 metric (purchase_revenue, ecommerce_purchases, etc.).

>> No dimension to break trends by (time, region, device, campaign).

>> No scope (are we looking at event-level purchases, sessions, or user cohorts?).

>> No date range or filters.
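The gaps above can be checked mechanically. A minimal sketch, assuming a hypothetical `GA4QuerySpec` structure and `find_gaps` helper (neither is part of any GA4 library), that reports which operational parameters a request leaves unspecified. Metric and dimension names use the GA4 Data API spelling (`purchaseRevenue`, `yearMonth`, `deviceCategory`); the dates are placeholders.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class GA4QuerySpec:
    """Hypothetical structured form of an analytics request (not a GA4 library type)."""
    metrics: list[str] = field(default_factory=list)       # e.g. ["purchaseRevenue"]
    dimensions: list[str] = field(default_factory=list)    # e.g. ["yearMonth", "deviceCategory"]
    scope: Optional[str] = None                            # "event", "session" or "user"
    date_range: Optional[tuple[str, str]] = None           # (start, end) as YYYY-MM-DD
    filters: dict[str, str] = field(default_factory=dict)  # dimension name -> exact value


def find_gaps(spec: GA4QuerySpec) -> list[str]:
    """Return the operational parameters the request leaves unspecified."""
    gaps = []
    if not spec.metrics:
        gaps.append("metric")
    if not spec.dimensions:
        gaps.append("dimension")
    if spec.scope is None:
        gaps.append("scope")
    if spec.date_range is None:
        gaps.append("date range")
    # Filters are optional for some questions; flagged here because the list above calls them out.
    if not spec.filters:
        gaps.append("filters")
    return gaps


# "Show me sales trends" carries none of these parameters.
print(find_gaps(GA4QuerySpec()))  # ['metric', 'dimension', 'scope', 'date range', 'filters']

# A fully specified request, like the sound prompt that follows, fills every slot.
sound = GA4QuerySpec(
    metrics=["purchaseRevenue"],
    dimensions=["yearMonth", "deviceCategory"],
    scope="session",
    date_range=("2024-03-01", "2025-02-28"),
    filters={"country": "United States"},
)
print(find_gaps(sound))  # []
```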

Example of an operationally sound GA4 prompt.

Following is an example of an operationally sound prompt in the context of GA4:

Using GA4 data, show me a month-over-month trend of purchase_revenue for the last 12 months, segmented by deviceCategory, and include only sessions where country equals "United States".

Why it works:

>> Names a specific metric: purchase_revenue.

>> Specifies a time dimension: month-over-month for 12 months.

>> Adds a breakdown dimension: deviceCategory.

>> Uses a filter: country = United States.

>> Context: Assumes GA4 event schema and naming conventions.
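To show how closely the sound prompt maps onto GA4’s own vocabulary, here is a sketch of an equivalent request body for the GA4 Data API `runReport` method (sent as a POST to `https://analyticsdata.googleapis.com/v1beta/properties/{propertyId}:runReport`). Note that the Data API spells the metric `purchaseRevenue`, and the monthly breakdown comes from the `yearMonth` dimension. The helper names and the reading of "last 12 months" as the 12 complete calendar months before today are assumptions; the code only builds the body and does not call the API.

```python
import json
from datetime import date, timedelta


def months_back(today: date, n: int) -> date:
    """Return the first day of the month that is n months before today's month."""
    # Count months from year 0 so the year rollover is handled by integer division.
    total = today.year * 12 + (today.month - 1) - n
    return date(total // 12, total % 12 + 1, 1)


def build_run_report_body(today: date) -> dict:
    """Build a runReport body for the 12 complete calendar months before `today`."""
    start = months_back(today, 12)
    # The day before the first of the current month is the last day of the previous month.
    end = date(today.year, today.month, 1) - timedelta(days=1)
    return {
        "dateRanges": [{"startDate": start.isoformat(), "endDate": end.isoformat()}],
        # yearMonth gives one row per month; deviceCategory splits each month by device.
        "dimensions": [{"name": "yearMonth"}, {"name": "deviceCategory"}],
        "metrics": [{"name": "purchaseRevenue"}],
        # Keep only rows where the country dimension is exactly "United States".
        "dimensionFilter": {
            "filter": {
                "fieldName": "country",
                "stringFilter": {"matchType": "EXACT", "value": "United States"},
            }
        },
        # Sort chronologically so the trend reads top to bottom.
        "orderBys": [{"dimension": {"dimensionName": "yearMonth"}}],
    }


body = build_run_report_body(date.today())
print(json.dumps(body, indent=2))
```

Month-over-month changes would then be computed from consecutive `yearMonth` rows of the response.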

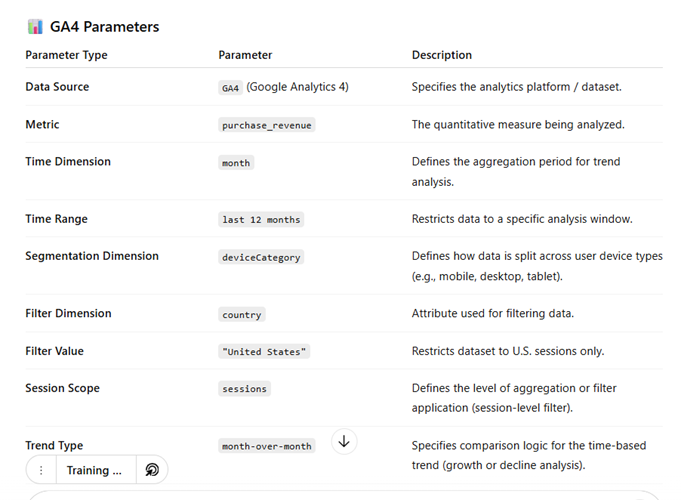

Domain parameters for querying GA4 data via AI chatbot.

The following are examples of domain parameters used in the operationally sound prompt for querying GA4 data via an AI chatbot.

Understanding GA4 Parameters is key.

You need a good understanding of all of these parameters (and more) to query GA4 data effectively via an AI chatbot.

In GA4’s case, if you don’t understand how events, parameters, dimensions, metrics, and scopes actually work together, you can’t even frame the right question, and the AI can’t produce a useful or actionable analysis in response.

Just as a VFX artist uses lens, lighting, and motion parameters to control a cinematic shot, a data analyst uses metrics, dimensions, filters, and scopes to define a precise analytical query.

The same logic applies to the GA4 BigQuery export. If you don’t understand the GA4 BigQuery schema, you can’t use AI to query the data correctly.
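For example, the same question against the GA4 BigQuery export uses different field names: revenue lives in `ecommerce.purchase_revenue`, device in `device.category`, and country in `geo.country`, with one `events_YYYYMMDD` table per day. A sketch, assuming placeholder project and dataset names and a hypothetical `build_bigquery_sql` helper, that returns the SQL with named query parameters (`@start_suffix`, `@end_suffix`, `@country`) for the values.

```python
import re


def build_bigquery_sql(project: str, dataset: str) -> str:
    """Return monthly purchase revenue by device for one country from the GA4 export.

    `project` and `dataset` are placeholders (the export dataset is usually
    named analytics_<property_id>). Values are left as named query parameters.
    """
    # Identifiers can't be passed as query parameters, so validate before interpolating.
    if not re.fullmatch(r"[a-z][a-z0-9-]*", project):
        raise ValueError(f"unexpected project id: {project!r}")
    if not re.fullmatch(r"[A-Za-z0-9_]+", dataset):
        raise ValueError(f"unexpected dataset name: {dataset!r}")
    return f"""
SELECT
  FORMAT_DATE('%Y-%m', PARSE_DATE('%Y%m%d', event_date)) AS year_month,
  device.category AS device_category,
  SUM(ecommerce.purchase_revenue) AS purchase_revenue
FROM `{project}.{dataset}.events_*`
WHERE _TABLE_SUFFIX BETWEEN @start_suffix AND @end_suffix  -- daily tables, YYYYMMDD
  AND event_name = 'purchase'
  AND geo.country = @country
GROUP BY year_month, device_category
ORDER BY year_month, device_category
""".strip()


sql = build_bigquery_sql("my-project", "analytics_123456789")
params = {"start_suffix": "20240301", "end_suffix": "20250228", "country": "United States"}
print(sql)
```

With the `google-cloud-bigquery` client, those values would typically be supplied as `ScalarQueryParameter` objects in a `QueryJobConfig`. Table names can’t be parameterised, which is why the helper validates them before interpolating.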

The same logic applies to n8n Integrations.

The same logic applies when you’re creating integrations using tools like n8n.

If you don’t understand the API endpoints, their methods, and the parameters they accept, you’ll struggle to build reliable automations or communicate your intent effectively to the workflow engine.
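Concretely, each API call in an automation is defined by a method, an endpoint, and the parameters it accepts, which roughly correspond to the Method, URL, and query/body settings of n8n’s HTTP Request node. The sketch below describes one call as data using only the Python standard library; the `ApiCall` class and the `api.example.com` endpoint are hypothetical, and the request is built but never sent.

```python
import json
from dataclasses import dataclass, field
from typing import Optional
from urllib.parse import urlencode
from urllib.request import Request

ALLOWED_METHODS = {"GET", "POST", "PUT", "PATCH", "DELETE"}


@dataclass
class ApiCall:
    """Hypothetical description of one API operation an automation step performs."""
    method: str
    base_url: str
    path: str
    query: dict[str, str] = field(default_factory=dict)
    body: Optional[dict] = None

    def to_request(self) -> Request:
        method = self.method.upper()
        if method not in ALLOWED_METHODS:
            raise ValueError(f"unsupported method: {self.method}")
        if method == "GET" and self.body is not None:
            raise ValueError("GET requests should not carry a JSON body")
        # Join base URL and path without doubling or dropping the slash.
        url = self.base_url.rstrip("/") + "/" + self.path.lstrip("/")
        if self.query:
            url += "?" + urlencode(self.query)  # percent-encodes values such as "@"
        data = None
        headers = {}
        if self.body is not None:
            data = json.dumps(self.body).encode("utf-8")
            headers["Content-Type"] = "application/json"
        return Request(url, data=data, headers=headers, method=method)


call = ApiCall(
    method="get",
    base_url="https://api.example.com/v1",
    path="/contacts",
    query={"email": "jane@example.com", "limit": "10"},
)
req = call.to_request()
print(req.get_method(), req.full_url)
```

Writing the call down this way forces the same questions the workflow engine will ask: which method, which endpoint, and which parameters it actually accepts.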

Domain expertise ALWAYS comes first, prompt engineering second.

What you need first is domain expertise, the understanding of what the domain parameters mean and how they interact. Prompt engineering comes second, as the expression of that expertise in operational form.

You need an AI Agent Supervisor.

As organizations evolve toward AI-driven operations, two key roles will define the structure of future teams:

- Agent Supervisors.

- Agent Developers.

Every department, from marketing to analytics, operations to finance, will eventually operate within this dual structure.

The Role of the Agent Supervisor.

The Agent Supervisor is the senior subject matter expert who provides the domain expertise necessary to make AI systems reliable and operationally sound.

They ensure that prompts reflect precise domain parameters, validate AI outputs against business logic, minimize hallucinations, and guide the strategic direction of AI deployment within their function.

Their role bridges human expertise and AI capability, ensuring that automated systems operate with accuracy, context, and accountability.

In essence, they provide the intellectual supervision that keeps AI aligned with real-world goals.

The Role of the Agent Developer.

The Agent Developer, by contrast, is the technical architect, responsible for building, maintaining, and optimizing AI agents.

They translate business logic, workflows, and domain knowledge into code, automation pipelines, and system integrations.

While the Agent Supervisor defines what the AI needs to understand and why, the Agent Developer determines how that understanding is implemented technically.

The two-person AI departments.

Like it or not, in most AI-integrated departments, Agent Supervisors and Agent Developers will be the core operational pair.

That said, as AI matures within organisations, additional roles will likely orbit around these two, not to replace them, but to complement and extend their impact.

In most future-facing businesses, two-person AI departments will be sufficient to operate entire business functions that today require dozens (or even hundreds) of people.

AI agents themselves become “staff,” executing routine workflows autonomously.

In essence:

- Domain expertise defines the parameters.

- Prompt engineering communicates them.

- Together, they make AI truly operational.

- Subject matter experts aren’t going anywhere. We will just need fewer of them.
