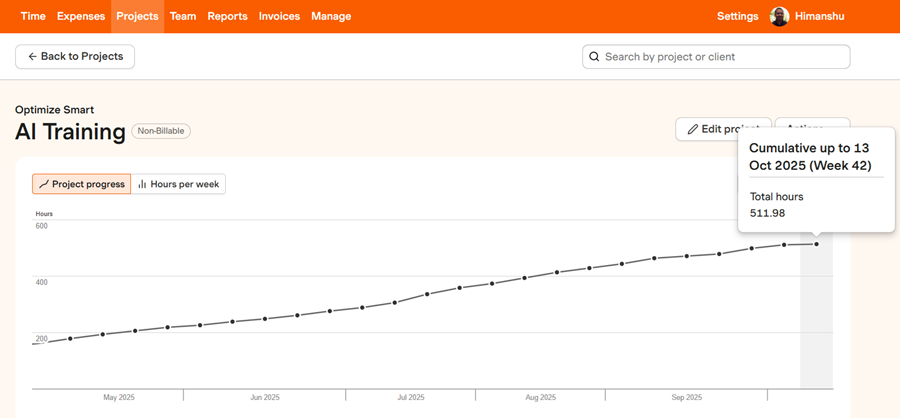

I’ve been tracking my time for over a decade using a time-tracking tool called 'Harvest'.

So naturally, I also log the hours I spend learning about AI, and as of today, it’s close to 512 hours.

Somewhere around the 300-hour mark, something shifted.

That’s when things started to click. You begin to connect the dots.

You start to understand how the moving parts of AI automation fit together, and suddenly, the big picture starts to appear.

Before that, it’s mostly chaos. You’re watching random YouTube videos, trying out new tools, jumping between tutorials, and nothing seems to make complete sense.

Then, one day, it does.

After spending over 500 hours learning, experimenting, and failing with AI, these are the lessons that truly stuck with me.

#1 The AI learning curve is steep and never-ending.

“AI” is an enormous field, spanning machine learning, prompting, workflows, domain expertise, AI agents, APIs, webhooks, Voice AI, RAG, and more.

At first, you might not feel the need to understand all of it. But once you start building AI agents, that changes fast.

Suddenly, you need to learn a specific tool or concept just to move forward, and your quick weekend project turns into weeks or months of digging into infrastructure, integrations, and architecture.

Building a production-ready AI agent demands far more than prompting skills.

You need to understand multiple platforms, handle orchestration and state management, and master concepts like RAG and prompt engineering.

Days turn into weeks and months, and you realize how much there still is to learn.

#2 Selling AI solutions is even more challenging than learning them.

Selling AI today feels like the early days of the dot-com era, when convincing businesses they needed a website was a challenge.

Many decision-makers still view AI as either science fiction or a simple chatbot, rather than as a flexible, integrable, and autonomous AI agent capable of performing complex, multi-step tasks.

Businesses have established (and often complex) workflows.

The introduction of a new, autonomous system, such as an AI agent, raises immediate concerns about data security, compatibility, and workflow disruption.

The gap between technological capabilities and business adoption remains wide.

We’re early in this journey. The tech is evolving far faster than its adoption, especially outside of personal or small-scale use.

#3 The most important AI Skill is Prompt Engineering.

All else being equal, the biggest performance gap between two AI agents usually comes down to one thing: the quality of their system prompts.

You can give two people access to the exact same model and tools, yet one agent delivers precise, context-aware responses while the other struggles with confusion and inconsistency.

Take two customer support agents built on the same LLM:

Agent A: Uses a generic prompt: “You are a helpful assistant.”

Agent B: Uses a carefully crafted prompt defining tone, reasoning limits, escalation rules, and knowledge boundaries.

The difference is night and day. Agent B feels intelligent, reliable, and on-brand. Agent A sounds robotic and inconsistent.
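To make the contrast concrete, here is a minimal Python sketch of how Agent B's prompt might be assembled from explicit sections. Everything in it is an assumption for illustration: the `SupportPromptSpec` class, the `build_system_prompt` helper, the "Acme Co" brand, and the individual rules are hypothetical, not taken from a real agent.

```python
from dataclasses import dataclass, field


@dataclass
class SupportPromptSpec:
    """Hypothetical container for the parts of a support agent's system prompt."""
    brand: str
    tone: str
    escalation_rules: list[str] = field(default_factory=list)
    knowledge_boundaries: list[str] = field(default_factory=list)


def build_system_prompt(spec: SupportPromptSpec) -> str:
    """Join the sections into a single system prompt string."""
    lines = [
        f"You are the customer support agent for {spec.brand}.",
        f"Tone: {spec.tone}",
        "Escalation rules:",
        *[f"- {rule}" for rule in spec.escalation_rules],
        "Knowledge boundaries:",
        *[f"- {item}" for item in spec.knowledge_boundaries],
        "If a request falls outside these boundaries, say so and offer to escalate.",
    ]
    return "\n".join(lines)


# Agent A: the generic prompt from the example above.
AGENT_A_PROMPT = "You are a helpful assistant."

# Agent B: the same model, but with explicit tone, escalation, and boundary rules.
AGENT_B_PROMPT = build_system_prompt(
    SupportPromptSpec(
        brand="Acme Co",  # placeholder brand name
        tone="Friendly, concise, no jargon.",
        escalation_rules=[
            "Hand off to a human when the customer asks for a refund.",
            "Hand off to a human after two failed attempts to resolve the issue.",
        ],
        knowledge_boundaries=[
            "Only answer questions about orders, shipping, and returns.",
            "Never guess at prices or delivery dates.",
        ],
    )
)

print(AGENT_B_PROMPT)
```

Keeping the sections as data rather than one long string makes it easier to review and version each rule on its own.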

After 18 months of hands-on work in this space, my conclusion hasn’t changed: prompt engineering remains the single most important skill in AI.

It’s not just a technical skill; it’s a creative discipline.

The system prompt is the foundation that determines how effectively an AI system performs in the real world.

#4 Prompt Engineering requires domain expertise.

Effective prompt engineering depends on domain expertise. Knowing what to ask and how to ask it comes from understanding the field itself.

AI tools don’t magically fill in the blanks when your question is vague, incomplete, or based on incorrect assumptions.

Take Google Analytics, for example: if you don’t understand how events, parameters, dimensions, metrics, and scopes work, you can’t even frame the right question for AI to return something useful.

The quality of the input fundamentally limits the quality of the output.

Vague Input: A non-expert might ask, "How many people visited my site?"

Expert Input: A domain expert knows to ask, "How many distinct users with at least one session in the last 30 days completed the 'purchase' event where the 'traffic_source' parameter was 'Google Organic'?"
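One way to see why the expert question works better is that it maps almost word for word onto a filter. The sketch below is only an illustration in plain Python: the event rows and their field names (`user_id`, `event`, `traffic_source`, `date`) are made up for the example and are not a real Google Analytics export schema.

```python
from datetime import date, timedelta

# Hypothetical event rows; the field names are assumptions for illustration.
EVENTS = [
    {"user_id": "u1", "event": "purchase", "traffic_source": "Google Organic", "date": date(2025, 1, 20)},
    {"user_id": "u1", "event": "purchase", "traffic_source": "Google Organic", "date": date(2025, 1, 25)},
    {"user_id": "u2", "event": "purchase", "traffic_source": "Newsletter", "date": date(2025, 1, 22)},
    {"user_id": "u3", "event": "page_view", "traffic_source": "Google Organic", "date": date(2025, 1, 23)},
    {"user_id": "u4", "event": "purchase", "traffic_source": "Google Organic", "date": date(2024, 11, 1)},
]


def distinct_purchasers(events, source, today, window_days=30):
    """Return the distinct users who purchased via `source` within the window."""
    cutoff = today - timedelta(days=window_days)
    return {
        e["user_id"]
        for e in events
        if e["event"] == "purchase"
        and e["traffic_source"] == source
        and cutoff <= e["date"] <= today
    }


users = distinct_purchasers(EVENTS, "Google Organic", today=date(2025, 1, 31))
print(len(users))  # 1 (only u1 matches every condition)
```

The vague question has no equivalent: "people" and "visited" don't say which field, which event, or which time window to use.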

An expert can immediately look at the AI's output and recognise if it violates industry standards, legal constraints, or known operational limits.

The AI can generate a perfect-sounding answer that is factually or practically wrong; the expert is the final quality check.

No matter how advanced AI becomes, if you can’t clearly express your needs in operational terms, you won’t get reliable results.

The primary goal of utilising an AI agent in a business workflow is not typically to obtain an answer. It's to trigger an action (e.g., generating code, classifying an email, updating a CRM field).

Domain expertise is needed to translate a high-level goal ("Improve lead quality") into the necessary operational steps an AI agent can execute:

High-Level Goal: Improve lead quality.

Operational Prompt (Expert):

"Analyze the sentiment of the last five customer interactions stored in the Intercom_Log field.

Based on the rules defined in SLA_Doc_v3, assign a score from 1-10 and update the Lead_Priority field in Salesforce with the corresponding classification (A, B, or C).

If the score is below 4, flag the lead for manual review."
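The scoring half of that prompt can also be written down as plain logic, which is a useful check that the instruction really is operational. This is a rough Python sketch under stated assumptions: the cut-offs for A, B, and C are invented here (the real ones would come from SLA_Doc_v3), and `LeadUpdate` and `classify_lead` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass
class LeadUpdate:
    lead_priority: str   # value for the Lead_Priority field: "A", "B", or "C"
    manual_review: bool  # True when the lead should be flagged for a human


def classify_lead(score: int) -> LeadUpdate:
    """Map a 1-10 score to a priority class and a manual-review flag."""
    if not 1 <= score <= 10:
        raise ValueError(f"score must be between 1 and 10, got {score}")
    # Assumed cut-offs for illustration; the real rules live in SLA_Doc_v3.
    if score >= 8:
        priority = "A"
    elif score >= 5:
        priority = "B"
    else:
        priority = "C"
    # From the prompt: scores below 4 are flagged for manual review.
    return LeadUpdate(lead_priority=priority, manual_review=score < 4)


print(classify_lead(9))  # LeadUpdate(lead_priority='A', manual_review=False)
print(classify_lead(3))  # LeadUpdate(lead_priority='C', manual_review=True)
```

If you can't write the rule this plainly, the prompt is probably still too vague for an agent to follow reliably.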

In short, AI doesn’t replace domain expertise; it amplifies it.

#5 Prompts are proprietary data.

Your prompts are your proprietary data. Don’t give them away for free.

They capture your reasoning process, domain expertise, and problem-solving patterns. In many cases, your prompts are more valuable than the tools you use.

Anyone can access the same AI models, but your prompts, the way you structure context, define behaviour, and guide outputs are what make your agent unique.

Treat them like intellectual property. Protect them, refine them, and build on them.

#6 Most of the AI workflows you see online are demos.

Most of the AI workflows you see online are demos, not real end-to-end automations.

When you build production-grade systems for businesses, there’s usually one small component that’s easy to showcase without revealing the full solution, and that’s the part you see on social media.

These demo workflows look polished in videos or screenshots, but they rarely deliver commercial value. They’re just one visible piece of a much larger system.

The “Shiny Part” vs. the “Hard Part”

The shiny part is almost always the generative step, the AI creating text, code, or images. It’s the easiest to film and the most impressive to a general audience.

The hard part, the part that actually creates business value, is everything around that:

- Authentication & Security: Securely connecting to systems like Salesforce, HubSpot, or SAP.

- Data Ingestion & Cleaning: Handling legacy formats, duplicates, and messy datasets.

- State Management & Persistence: Tracking progress across multiple steps and ensuring data is saved even if a process fails midway.

- Error Handling & Fallbacks: Managing CRM timeouts, API errors, or LLM hallucinations with structured recovery or human handoff.
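As one small example of that "hard part", here is a minimal Python sketch of the error-handling item: retry a flaky step with exponential backoff, then hand off to a human if it keeps failing. The helper `run_with_fallback` and the two stand-in functions are hypothetical, and a real workflow would catch specific errors rather than every `Exception`.

```python
import time
from typing import Callable, Optional, TypeVar

T = TypeVar("T")


def run_with_fallback(
    step: Callable[[], T],
    fallback: Callable[[Optional[Exception]], T],
    attempts: int = 3,
    base_delay: float = 0.5,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Try `step` up to `attempts` times, then hand the last error to `fallback`."""
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    last_error: Optional[Exception] = None
    for attempt in range(attempts):
        try:
            return step()
        except Exception as error:  # in real code, catch only the errors you expect
            last_error = error
            if attempt < attempts - 1:
                sleep(base_delay * 2 ** attempt)  # exponential backoff between tries
    return fallback(last_error)


# Stand-in for a CRM call that times out twice before succeeding.
calls = {"count": 0}


def flaky_crm_update() -> str:
    calls["count"] += 1
    if calls["count"] < 3:
        raise TimeoutError("CRM timed out")
    return "updated"


def hand_to_human(error: Optional[Exception]) -> str:
    return f"queued for human review: {error}"


result = run_with_fallback(flaky_crm_update, hand_to_human, sleep=lambda _: None)
print(result)  # updated
```

Passing `sleep` as a parameter is only there so the example runs instantly; in production you would leave the default.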

Without CRM integration, webhooks, APIs, or databases, the workflow remains a digital sandbox, an impressive trick, but not a tool that moves the bottom line.

To transition from a great demo to a valuable solution, builders must spend 80% of their effort on the unglamorous, integrated scaffolding that enables the AI’s 20% “shiny part” to actually interact with the real world.

#7 n8n is just the engine. Your business still needs the car.

When I first started learning tools like n8n, I assumed that mastering it would allow me to build complete AI automation workflows for businesses.

It took me a couple of months to realise that what I was really learning was how to build the engine, not the entire vehicle.

That’s also what you typically see on social media: influencers showcasing n8n workflows and giving the impression that’s all there is to AI automation.

In reality, n8n is just the engine. It powers the automation, but it doesn’t provide the client-facing interface.

Clients don’t interact with n8n directly. They interact through a website form, phone call, or mobile app.

Their data flows into a CRM or database, which then triggers your n8n workflow for further processing. Once the automation completes, the results are passed back to the original system.
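The round trip described above can be sketched in a few lines of Python. This is a toy model, not n8n code: the in-memory `crm` dict stands in for a real CRM, the `workflow` function stands in for the automation engine, and the direct function call stands in for a webhook. All names are hypothetical.

```python
from typing import Callable

# In-memory stand-in for a CRM; a real one would be reached through its API.
crm: dict[str, dict] = {}


def submit_form(email: str, message: str, trigger: Callable[[dict], dict]) -> None:
    """Client-facing entry point: store the record, then trigger the workflow."""
    crm[email] = {"email": email, "message": message, "status": "new"}
    result = trigger({"email": email, "message": message})  # stands in for a webhook call
    crm[email].update(result)  # results are passed back to the original system


def workflow(payload: dict) -> dict:
    """Stand-in for the automation engine (the role n8n plays in the text)."""
    urgent = "urgent" in payload["message"].lower()
    return {"status": "processed", "priority": "high" if urgent else "normal"}


submit_form("jo@example.com", "Urgent: my order never arrived", workflow)
print(crm["jo@example.com"]["priority"])  # high
```

Notice that the client only ever touches `submit_form`; the engine is invisible to them.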

Think of it this way:

- Your website, app, or CRM is the car.

- n8n is the engine that makes it move.

Without the rest of the infrastructure, the body, wheels, and steering, the engine alone won’t get you anywhere.

#8 Building your AI stack is more important than chasing new tools.

Just like you built your marketing or analytics stack, you need to build your AI stack and then commit to it.

Jumping from one AI tool to the next, or switching between LLMs every few weeks, only slows your progress.

For example, I stick with ChatGPT models for reasoning and n8n for workflow automation. This consistency allows me to delve deeper, optimise, and actually build reliable systems.

If you keep chasing shiny objects, you’ll never master anything.

You don’t need to know every new tool that hits the market; what you need is a tight, reliable stack that grows with you and compounds your expertise over time.

#9 Automation without CRM integration has no commercial value.

If your automation workflows don’t send and receive data from your CRM, they hold little commercial value.

Without CRM integration, every automated task operates in isolation.

You might be sending emails, logging calls, or pushing invoices, but if these actions aren’t connected to a unified customer record, you lose visibility into the complete customer journey.

A true business automation system depends on a centralised context, the ability to see and act on a single customer profile across channels.

Without that integration, your automation isn’t a system; it’s just a collection of disconnected tasks.
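Here is a minimal Python sketch of what "centralised context" means in practice: events from separate automations are grouped under one customer ID so they can be read as a single journey. The event fields and the `build_profiles` helper are assumptions for illustration; a real CRM does this for you through its own data model.

```python
from collections import defaultdict

# Hypothetical events emitted by three separate automations.
events = [
    {"customer_id": "c1", "channel": "email", "action": "welcome email sent"},
    {"customer_id": "c2", "channel": "phone", "action": "call logged"},
    {"customer_id": "c1", "channel": "billing", "action": "invoice pushed"},
]


def build_profiles(events):
    """Group events by customer so each journey can be read in one place."""
    profiles = defaultdict(list)
    for event in events:
        profiles[event["customer_id"]].append((event["channel"], event["action"]))
    return dict(profiles)


profiles = build_profiles(events)
print(profiles["c1"])
# [('email', 'welcome email sent'), ('billing', 'invoice pushed')]
```

Without the shared `customer_id`, the email and the invoice for the same person would just be two unrelated log lines.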

#10 It’s never about workflows, it’s about AI infrastructure.

Initially, your goal may be to create and deploy a single AI agent workflow as quickly as possible.

But it doesn’t take long to realise that a single workflow is just a demo, impressive in isolation, but offering little commercial value.

Real value emerges when multiple workflows, databases, integrations, and human oversight come together into a reliable, connected system that supports a business end-to-end.

What begins as a small automation project almost always evolves into building and ultimately selling AI infrastructure.

Most people underestimate that reality at the start. You think you’re building a workflow, but you’re actually designing the foundation of a scalable system.

#11 Too much reliance on LLMs weakens your automation.

It’s ironic, considering they’re called AI agents, but the more your systems depend on LLMs, the less reliable they tend to be.

As long as LLMs continue to hallucinate, they can’t match the consistency of well-tested code blocks, specialised third-party tools, or human oversight.

Every time you make an LLM the critical point of failure in your workflow, you trade robustness for flexibility.

To build reliable systems, reduce your reliance on LLMs and prompts alone and anchor your agents in structured logic, deterministic tools, and clear fallback mechanisms.
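A minimal Python sketch of that idea, assuming an email-classification step: the LLM's answer is accepted only if it is one of a fixed set of labels, and a deterministic keyword rule takes over otherwise. The labels, the `keyword_rule` fallback, and the fake `hallucinating_llm` are all hypothetical; the `llm` argument is any function that takes text and returns text.

```python
from typing import Callable

ALLOWED_LABELS = {"billing", "technical", "sales"}


def keyword_rule(email_text: str) -> str:
    """Deterministic fallback: crude, but it always returns an allowed label."""
    text = email_text.lower()
    if "invoice" in text or "refund" in text:
        return "billing"
    if "error" in text or "bug" in text:
        return "technical"
    return "sales"


def classify_email(email_text: str, llm: Callable[[str], str]) -> tuple[str, str]:
    """Return (label, source). The LLM's answer is used only if it passes validation."""
    try:
        label = llm(email_text).strip().lower()
    except Exception:  # an API error is treated the same as an invalid answer
        label = ""
    if label in ALLOWED_LABELS:
        return label, "llm"
    return keyword_rule(email_text), "rule"


def hallucinating_llm(_: str) -> str:
    """Fake model that ignores the instructions and returns free text."""
    return "Probably a complaint about pricing?"


print(classify_email("I was charged twice, please refund me", hallucinating_llm))
# ('billing', 'rule')
```

Returning the source alongside the label makes it easy to log how often the fallback fires, which is a rough measure of how much you are really relying on the model.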

#12 The future isn’t “no-code AI”.

With no-code AI platforms, you didn’t build the logic, so you don’t control it. It’s as simple as that.

When something breaks, you have no idea what’s happening under the hood, and good luck customising it beyond what the AI thinks you meant.

Platforms like Opal, Base44, and Lovable are built around natural-language orchestration, not explicit logic definition.

For example, Opal lets you build apps “that chain prompts, models, and tools, all using simple natural language and visual editing.”

That sounds powerful until you realize there’s no visible code map showing how your data moves or transforms. It’s all hidden behind model interpretation.

The system might behave correctly 90% of the time, but when it misinterprets your intent, there’s no surface to debug, no logic to inspect or modify.

Unless you can clearly describe your intent in operational terms, the exact data, logic, and dependencies, your prompts will eventually fail.

Even Base44’s own documentation warns: “Be clear and specific… a little context goes a long way.”

That’s just another way of saying: you still need domain expertise.

#13 You can’t optimize for AI-generated responses.

Large Language Models (LLMs) don’t retrieve or rank results; they generate them.

Each response is a probabilistic composition, created from statistical associations within the model’s training data rather than fetched from a fixed index or ranked list.

This means the process is stochastic, not deterministic. Outputs are dynamic, context-dependent, and can vary even with identical prompts.
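To show what "stochastic" means here, the Python sketch below samples a next token from a softmax over made-up scores, which is a simplified picture of how text generation picks each word. The scores and token names are invented for the example, and real models work over vocabularies of many thousands of tokens with additional sampling controls.

```python
import math
import random


def sample_next_token(scores: dict[str, float], temperature: float, rng: random.Random) -> str:
    """Sample one token from softmax(scores / temperature)."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    top = max(scores.values())  # subtract the max for numerical stability
    weights = [math.exp((s - top) / temperature) for s in scores.values()]
    return rng.choices(list(scores), weights=weights, k=1)[0]


# Made-up scores for the next word after the same, identical prompt.
scores = {"BrandA": 2.0, "BrandB": 1.8, "BrandC": 1.5, "BrandD": 1.2}

rng = random.Random()  # unseeded, so results differ between runs
samples = [sample_next_token(scores, temperature=1.0, rng=rng) for _ in range(10)]
print(samples)  # the mix of brands varies from run to run
```

With these scores the top option is picked only about a third of the time, so the same prompt can name a different brand on the next run.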

When SEOs claim they can “optimize” for AI-generated responses, they’re repeating an old cognitive error, mistaking correlation for causation.

If an AI occasionally echoes your phrasing or references your website, that doesn’t mean your optimization influenced it. It’s almost always a stochastic coincidence, an incidental overlap emerging from how probabilistic models assemble text.

If you need more convincing: You Can’t Optimise For AI-generated Responses.

#14 The future of work: Agent developers and Agent supervisors.

Most companies of the future will revolve around two core roles: Agent Developers and Agent Supervisors.

Over time, every department will likely operate within this structure.

Agent Supervisors will be senior subject-matter experts responsible for overseeing the performance, reliability, and strategic alignment of AI agents.

Their role will include validating AI outputs, minimising hallucinations, and ensuring that the AI’s work aligns with brand, business, and compliance standards.

For example, a CMO could act as the Agent Supervisor for one or more marketing agents, guiding them to produce campaigns that stay on-brand and are strategically aligned with company goals.

Agent Developers, on the other hand, will build, maintain, and optimise these agents, translating business logic and domain knowledge into operational code, APIs, and workflows.

In this model, traditional junior roles may disappear.

Repetitive, execution-heavy tasks will be handled by AI agents, leaving only a few experts to supervise and a few developers to build.

That means no large, siloed teams for SEO, analytics, or marketing; instead, functional leads act as Supervisors, supported by Developers.

Even traditional HR departments may shrink as organisations move toward smaller, highly specialised teams, where human expertise drives strategy, and AI handles execution.

The critical hand-off gap.

The most fragile point in this structure is the hand-off between the Supervisor (the business or domain expert) and the Developer (the technical expert).

The translation problem.

How well can a CMO articulate “brand voice” or “strategic intent” as quantifiable metrics and logical frameworks that an Agent Developer can translate into code?

This translation gap is currently bridged by intermediate roles, such as product owners, project managers, and business analysts, who interpret business strategy into technical requirements.

Hybrid roles.

In the emerging model, we may see the rise of a new, highly paid hybrid role, the Prompt Engineer/Business Logic Analyst, someone specialised in translating strategic ambiguity into AI-operable instructions.

This role combines meta-prompting, workflow design, and domain understanding, serving as the connective tissue between Supervisors and Developers.

#15 The AI disruption will hit faster than anyone expects.

The AI collapse in the job and agency markets won’t be gradual; it’ll be sudden, a free fall.

If a single voice AI agent can handle twenty calls simultaneously, that’s twenty human roles replaced instantly. If that’s not a sudden free fall, what is?

When it hits, there won’t be time to upskill; that window is already closing fast.

An entire generation of AI developers is preparing to take over, building systems that can scale infinitely and operate without fatigue, breaks, or overhead.

The best time to learn AI is now, not tomorrow.

Every day you delay puts you further behind, forcing you to play catch-up in a world that’s accelerating faster than most people realise.
