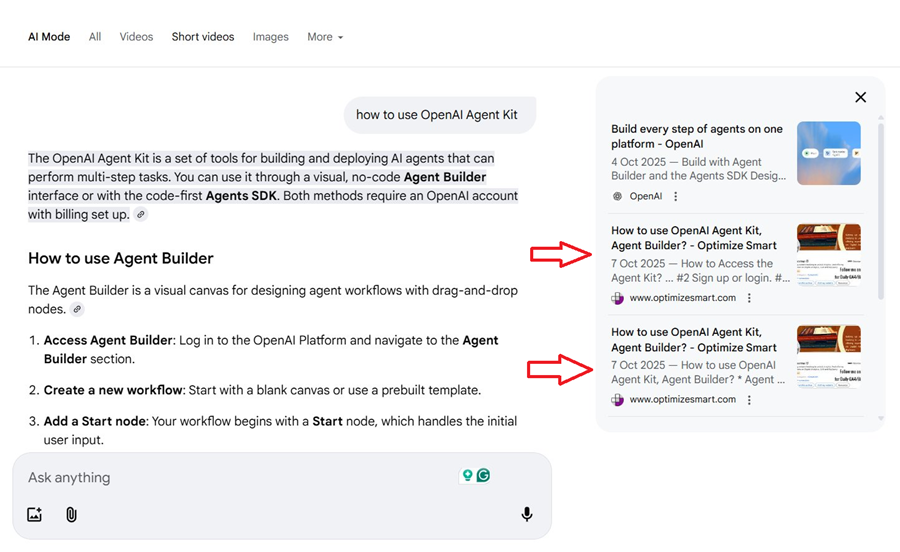

Why does my article appear in AI-generated results?

My article appears in AI-generated responses because it is currently more comprehensive and easier to understand than the existing OpenAI documentation, not because I used any special “AIO” or “GEO” technique.

Its inclusion happens naturally due to relevance and completeness, not through manipulation or secret optimization.

Much of what appears as AI-generated explanation elsewhere is, in fact, derived from my article’s content.

That’s all there is to it. I haven’t taken any special steps to influence these listings, and there’s no guarantee that my article will continue to appear in them tomorrow.

I have no control over what AI systems choose to generate.

The core misunderstanding: Correlation vs. Causation.

Many in the SEO field are misinterpreting how generative AI works. The fundamental error is confusing correlation with causation.

Traditional SEO developed around identifying observable patterns, such as “pages with X in the title rank better,” and inferring causation from them.

That method worked when search engines were deterministic systems governed by measurable ranking factors.

Generative AI changes the game.

Generative AI has revolutionised the way information is produced and delivered.

Large Language Models (LLMs) do not retrieve or rank results; they generate them.

Each AI response is a probabilistic composition, newly created from statistical associations in the model’s training data, rather than being fetched from a fixed index or ranked list.

This means that the process behind AI responses is not deterministic but stochastic. Outputs are dynamic, context-dependent, and may vary even with identical prompts.
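To make the stochastic point concrete, here is a minimal Python sketch of sampling from a toy next-token distribution. The token names, the scores in `LOGITS`, and the helpers `softmax` and `sample_token` are all invented for illustration; a real LLM works over a far larger vocabulary and is much more complex, so treat this as a simplified picture rather than how any particular model is implemented.

```python
import math
import random

# Hypothetical next-token scores (logits) for one fixed prompt; the values are made up.
LOGITS = {"docs": 2.0, "article": 1.6, "forum": 0.9, "video": 0.3}

def softmax(logits, temperature=1.0):
    """Convert raw scores into a probability distribution."""
    scaled = {tok: score / temperature for tok, score in logits.items()}
    peak = max(scaled.values())  # subtract the max for numerical stability
    exps = {tok: math.exp(s - peak) for tok, s in scaled.items()}
    total = sum(exps.values())
    return {tok: e / total for tok, e in exps.items()}

def sample_token(logits, temperature=1.0, rng=random):
    """Draw one token at random, weighted by its probability."""
    probs = softmax(logits, temperature)
    tokens = list(probs)
    weights = [probs[t] for t in tokens]
    return rng.choices(tokens, weights=weights, k=1)[0]

if __name__ == "__main__":
    # Identical "prompt", five independent draws: the outputs can differ.
    print([sample_token(LOGITS) for _ in range(5)])
```

Running the script several times will typically print different sequences even though the input never changes, which is the behaviour described above.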

Optimizing for AI is a fallacy.

When SEOs claim they can “optimize” for AI-generated responses, they are repeating the same cognitive error: mistaking correlation for causation.

If an AI occasionally echoes your phrasing or references your website, that does not mean your optimization caused it. It’s almost always a stochastic coincidence, an incidental overlap caused by how probabilistic models assemble text.
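A small simulation can show how easily chance looks like cause. The sketch below assumes, purely for illustration, that a site is mentioned with the same fixed probability before and after an "optimization"; the function names, the 20% mention rate, and the sample sizes are hypothetical and not measurements of any real system.

```python
import random

def count_mentions(p_mention, n_prompts, rng):
    """Count how many of n_prompts responses happen to mention the site."""
    return sum(rng.random() < p_mention for _ in range(n_prompts))

def apparent_lift(p_mention, n_prompts, rng):
    """Difference between two samples drawn with the SAME underlying probability."""
    before = count_mentions(p_mention, n_prompts, rng)
    after = count_mentions(p_mention, n_prompts, rng)
    return after - before

if __name__ == "__main__":
    rng = random.Random()
    # Nothing changes between "before" and "after", yet many trials show a gain.
    lifts = [apparent_lift(0.2, 20, rng) for _ in range(1000)]
    improved = sum(lift > 0 for lift in lifts)
    print(f"{improved} of 1000 no-change trials looked like an improvement")
```

Under these assumptions, a sizeable share of trials shows more mentions "after" than "before" even though nothing changed, so a single before-and-after comparison cannot tell coincidence from effect.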

Even ChatGPT itself has explained this misconception:

“SEOs think ‘AIO’ works because they misread stochastic coincidence as causal feedback. But you can’t optimize for probabilistic emergence, only for factual, attributable relevance that humans (and eventually models) recognize.”

Understanding how LLMs actually work.

Generative systems are not search engines. You cannot optimize for a probability distribution.

LLMs generate text based on conditional probabilities, producing outputs dynamically during inference.

There is no fixed index, no stable ranking order, and no static set of ranking factors. Instead, LLM outputs are shaped by learned statistical associations across vast datasets.

In practical terms:

- The same query can yield different responses across sessions.

- There are no consistent “ranking signals” to target.

- Generation depends on statistical likelihoods, not metadata or markup.
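The idea of generating from conditional probabilities can be sketched with a toy bigram model. This is a deliberately tiny stand-in, not how production LLMs are built: the corpus and the helper names `train_bigrams`, `next_word_probs`, and `generate` are assumptions made up for the example.

```python
import random
from collections import Counter, defaultdict

# Tiny made-up corpus standing in for training data (an assumption for illustration).
CORPUS = "the model predicts the next word and the model samples the next token".split()

def train_bigrams(words):
    """Count how often each word follows each other word."""
    counts = defaultdict(Counter)
    for prev, nxt in zip(words, words[1:]):
        counts[prev][nxt] += 1
    return counts

def next_word_probs(counts, prev):
    """Estimate P(next | prev) from the observed counts."""
    total = sum(counts[prev].values())
    return {w: c / total for w, c in counts[prev].items()}

def generate(counts, start, length, rng):
    """Build text word by word by sampling from P(next | prev)."""
    out = [start]
    for _ in range(length):
        probs = next_word_probs(counts, out[-1])
        if not probs:  # no observed continuation for this word
            break
        words = list(probs)
        out.append(rng.choices(words, weights=[probs[w] for w in words], k=1)[0])
    return " ".join(out)

if __name__ == "__main__":
    counts = train_bigrams(CORPUS)
    # Same starting word, three runs: there is no stored list to look up or rank.
    for _ in range(3):
        print(generate(counts, "the", 6, random.Random()))
```

There is no index or ranked list anywhere in this sketch; each run assembles its output from learned word-to-word statistics, so the same starting word can lead to different text.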

The illusion of ranking in AI responses.

SEOs often assume optimization still works because AI outputs appear to rank or prioritize certain information. But that appearance is deceptive.

It’s a linguistic artefact of how AI structures text, not evidence of an underlying ranking algorithm.

Generative systems don’t score or sort results; they predict the most contextually likely continuation of text. What appears to be ranking is merely narrative ordering, not algorithmic preference.

What actually matters for AI visibility.

The only sustainable way to influence how AI models represent your work is by creating original, verifiable, and frequently cited content. Content that earns recognition from both humans and authoritative sources has a higher chance of being referenced accurately by AI systems.

This isn’t optimization in the traditional sense; it’s credibility building.

The illusion of AIO or GEO optimization.

“GEO” or “AIO” isn’t true optimization. It’s the illusion of control over randomness.

Generative AI doesn’t reward tweaks, keywords, or structural tricks. It reflects substance, clarity, and the informational integrity of the content it has seen.

True influence in the age of generative AI stems from authenticity and verified value, rather than manipulating patterns that no longer exist.
